Introduction

This article describes how GOP manages LVS-NAT, LVS-DR, and LVS-TUN with Keepalived. GOP first chose LVS-NAT, but its performance was poor, so GOP switched to LVS-DR. That setup was stable for a long time, until GOP needed to balance traffic across subnets. After many failed attempts, GOP built a hybrid model that can use LVS-DR and LVS-TUN in one group, and this article shares that method.

What is LVS?

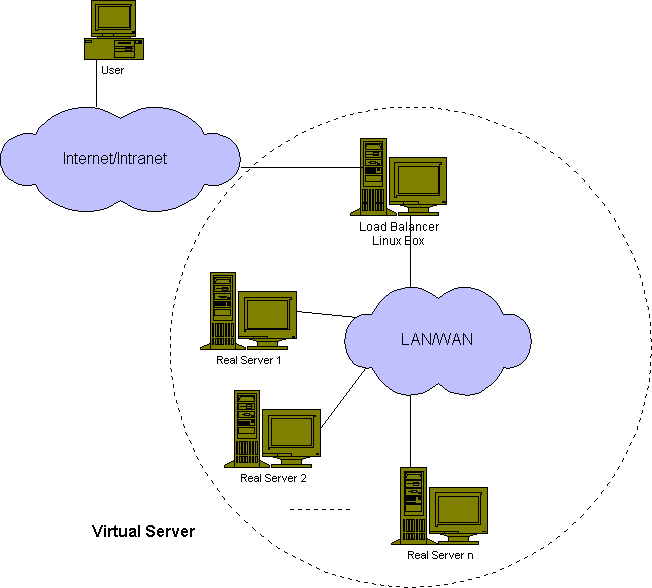

A virtual server is a highly scalable and highly available server built on a cluster of real servers. The architecture of the server cluster is fully transparent to end users, who interact with the cluster as if it were a single high-performance virtual server. The figure above illustrates this architecture.

The real servers and the load balancers may be interconnected by either a high-speed LAN or a geographically dispersed WAN. The load balancers dispatch requests to the different servers and make the parallel services of the cluster appear as a virtual service on a single IP address. Request dispatching can use IP load balancing technologies or application-level load balancing technologies. Scalability is achieved by transparently adding or removing nodes in the cluster. High availability is provided by detecting node or daemon failures and reconfiguring the system appropriately.

What is Keepalived?

Keepalived is routing software written in C. The main goal of the project is to provide simple and robust facilities for load balancing and high availability to Linux systems and Linux-based infrastructures. The load balancing framework relies on the well-known and widely used Linux Virtual Server (IPVS) kernel module, which provides Layer 4 load balancing. Keepalived implements a set of checkers to dynamically and adaptively maintain and manage the load-balanced server pool according to the servers' health. High availability, in turn, is achieved by the VRRP protocol, a fundamental building block for router failover. In addition, Keepalived implements a set of hooks into the VRRP finite state machine, providing low-level and high-speed protocol interactions. To offer the fastest network failure detection, Keepalived implements the BFD protocol, and VRRP state transitions can take BFD hints into account to drive fast state transitions. The Keepalived frameworks can be used independently or all together to provide resilient infrastructures.

How does LVS work?

The virtual server is currently implemented in three ways: three IP load balancing techniques (packet forwarding methods) coexist in the LinuxDirector. They are virtual server via NAT, virtual server via IP tunneling, and virtual server via direct routing.

Here’s a typical LVS-NAT setup.

                            ________
                           |        |
                           | client | (local or on internet)
                           |________|
                               |
                            (router)
                           DIRECTOR_GW
                               |
    --                         |
    L                      Virtual IP
    i                      ____|_____
    n                     |          | (director can have 1 or 2 NICs)
    u                     | director |
    x                     |__________|
                              DIP
    V                          |
    i                          |
    r         -----------------+----------------
    t         |                |               |
    u         |                |               |
    a        RIP1             RIP2            RIP3
    l   ____________     ____________    ____________
       |            |   |            |  |            |
    S  | realserver |   | realserver |  | realserver |
    e  |____________|   |____________|  |____________|
    r
    v
    e
    r

Here is a typical LVS-DR or LVS-Tun setup.

                            ________
                           |        |
                           | client | (local or on internet)
                           |________|
                               |
                            (router)-----------
                               |    SERVER_GW  |
    --                         |               |
    L                         VIP              |
    i                      ____|_____          |
    n                     |          | (director can have 1 or 2 NICs)
    u                     | director |         |
    x                     |__________|         |
                              DIP              |
    V                          |               |
    i                          |               |
    r         -----------------+----------------
    t         |                |               |
    u         |                |               |
    a      RIP1,VIP         RIP2,VIP        RIP3,VIP
    l   ____________     ____________    ____________
       |            |   |            |  |            |
    S  | realserver |   | realserver |  | realserver |
    e  |____________|   |____________|  |____________|
    r
    v
    e
    r

For director failover (covered in the LVS-HOWTO), the VIP and DIP will be moved to a backup director. These two IPs therefore cannot be the primary IP on the director NIC(s); they must be secondary IPs (formerly called aliases). The primary IPs on the director NIC(s) can be anything compatible with the network.

Any setup procedure (including the configure script) assumes that you have already set up all IPs and network connections apart from the VIP and DIP: that is, RIP1…n, the primary IPs on the director NIC(s), and the DIRECTOR_GW/SERVER_GW, and that the link layer joining these IPs is functioning. The configure script will set up the VIP and DIP (0.9.x sets them up on aliases; later versions will hopefully set them up on secondary IPs).

You will need a minimum of 3 machines (1 client, 1 director, 1 realserver). If you have 4 machines (i.e. 2 realservers), you can see load balancing in action: the connection from the client to the LVS is sent to one realserver, then to the other.

You can set up an LVS with 2 machines (1 client, 1 director) using the localnode feature of LVS, with the director also functioning as a realserver. However, this doesn’t demonstrate how to scale up an LVS farm, and you may have difficulty telling whether it is working if you’re new to LVS.

The director connects to all boxes. If you have only one keyboard and monitor, you can set everything up from the director.

You need:

Client:

Any machine, any OS, with a telnet client and/or an http client (e.g. netscape, lynx, IE). (I used a Mac client for my first LVS.)

Director:

A machine running Linux kernel 2.2.x (x>=14) or 2.4.x patched with ipvs. If you’re starting from scratch, use a 2.4.x kernel, as ipchains (used in 2.2.x kernels) is no longer being developed, having been replaced by iptables.

Realserver(s):

These are the machines offering the service of interest (here telnet and/or http). In production environments these can have any operating system, but for convenience I will discuss the case where they are Linux machines with kernel >=2.2.14. (Support for earlier kernels has been dropped from the HOWTO.)

The director can forward packets by 3 methods. Which OS the realservers can run depends on the method:

- LVS-NAT - any machine, any OS, running some service of interest (eg httpd, ftpd, telnetd, smtp, nntp, dns, daytime …). The realserver just has to have a tcpip stack - even a network printer will do.

- LVS-DR - OS known to work are listed in the HOWTO: almost all unices and Microsoft OS.

- LVS-Tun - realserver needs to run an OS that can tunnel (only Linux so far).

Related Terms

LB (Load Balancer)

HA (High Availability)

Failover

Cluster

LVS (Linux Virtual Server)

DS (Director Server)

RS (Real Server)

VIP (Virtual IP): the IP that the director presents to clients.

DIP (Director IP)

RIP (Real Server IP)

CIP (Client IP)

The comparison of VS/NAT, VS/TUN and VS/DR

| | VS/NAT | VS/TUN | VS/DR |
|---|---|---|---|
| server | any | tunneling | non-arp device |
| server network | private | LAN/WAN | LAN |
| server number | low (10~20) | high | high |
| server gateway | load balancer | own router | own router |

Virtual Server via NAT

The advantage of the virtual server via NAT is that real servers can run any operating system that supports the TCP/IP protocol, real servers can use private Internet addresses, and only one IP address is needed for the load balancer.

The disadvantage is that the scalability of the virtual server via NAT is limited. The load balancer may become a bottleneck of the whole system when the number of server nodes (general PC servers) increases to around 20 or more, because both the request packets and the response packets need to be rewritten by the load balancer. Suppose the average length of TCP packets is 536 bytes and the average delay of rewriting a packet is around 60us (on a Pentium processor; this can be reduced a little with a faster processor). The maximum throughput of the load balancer is then 8.93 MBytes/s. Assuming the average throughput of a real server is 400 KBytes/s, the load balancer can schedule 22 real servers.
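
The estimate above can be reproduced with a few lines of shell arithmetic. This is only a sketch of the calculation: the function names `nat_throughput_mbytes` and `nat_max_servers` are made up for this example, and it assumes decimal units (1 MByte = 10^6 bytes, 1 KByte = 10^3 bytes), which is what reproduces the figures quoted in the text.

```bash
#!/bin/bash
# Sketch of the VS/NAT capacity estimate from the paragraph above.
# Function names are illustrative; decimal units are an assumption.

# nat_throughput_mbytes <avg_packet_bytes> <rewrite_delay_us>
# One packet is rewritten per delay interval, so throughput = bytes / delay.
nat_throughput_mbytes() {
    awk -v len="$1" -v us="$2" \
        'BEGIN { printf "%.2f\n", len / (us / 1000000) / 1000000 }'
}

# nat_max_servers <avg_packet_bytes> <rewrite_delay_us> <server_kbytes_per_s>
# Number of real servers whose combined traffic fits in that throughput.
nat_max_servers() {
    awk -v len="$1" -v us="$2" -v kb="$3" \
        'BEGIN { printf "%d\n", int((len / (us / 1000000)) / (kb * 1000)) }'
}

echo "throughput: $(nat_throughput_mbytes 536 60) MBytes/s"
echo "real servers: $(nat_max_servers 536 60 400)"
```

With the numbers from the text (536 bytes, 60us, 400 KBytes/s) this prints 8.93 MBytes/s and 22 real servers. Changing the rewrite delay shows how strongly the limit depends on the per-packet cost on the load balancer.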

Virtual server via NAT can meet the performance requirements of many servers. Even when the load balancer becomes a bottleneck of the whole system, there are two ways to solve it: one is the DNS hybrid approach, and the other is the virtual server via IP tunneling or via direct routing. In the DNS hybrid approach, there are many load balancers, each with its own server cluster, and the load balancers are grouped under a single domain name by Round-Robin DNS. You can use VS-Tunneling or VS-DRouting for good scalability. You can also try nested VS load balancers: the front end is a VS-Tunneling or VS-DRouting load balancer, and the second layer consists of many VS-NAT load balancers, each with its own cluster.

Virtual Server via IP Tunneling

In the virtual server via NAT, request and response packets all need to pass through the load balancer, so the load balancer may become a new bottleneck when the number of server nodes increases to 20 or more, because the throughput of the network interface is ultimately limited. We can see from many Internet services (such as web services) that request packets are often short while response packets usually carry a large amount of data.

In the virtual server via IP tunneling, the load balancer just schedules requests to the different real servers, and the real servers return replies directly to the users. The load balancer can therefore handle a huge number of requests; it may schedule over 100 real servers without becoming the bottleneck of the system. :-) Using IP tunneling thus greatly increases the maximum number of server nodes for a load balancer. The maximum throughput of the virtual server can reach over 1Gbps, even if the load balancer has only a 100Mbps full-duplex network adapter.

The IP tunneling feature can be used to build a very high-performance virtual server. It is especially well suited to building a virtual proxy server, because when a proxy server gets a request, it can access the Internet directly to fetch objects and return them directly to the users.

However, all servers must have the “IP Tunneling” (IP Encapsulation) protocol enabled. I have only tested it with Linux IP tunneling. If you make the virtual server work on servers running other operating systems with IP tunneling, please let me know; I will be glad to hear about it.

Virtual Server via Direct Routing

Like in the virtual server via tunneling approach, LinuxDirector processes only the client-to-server half of a connection in the virtual server via direct routing, and the response packets can follow separate network routes to the clients. This can greatly increase the scalability of virtual server.

Compared to the virtual server via IP tunneling approach, this approach doesn’t have the tunneling overhead (in fact, this overhead is minimal in most situations), but it requires that one of the load balancer’s interfaces and the real servers’ interfaces be in the same physical segment.
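
The same-segment requirement is what decides between DR and TUN for a given real server. The sketch below picks a forwarding method by checking whether the director and a real server share a subnet. Treating "same subnet" as a stand-in for "same physical segment" is an assumption that should be checked against the real layer-2 topology. The function names and the address 10.65.34.13 are hypothetical.

```bash
#!/bin/bash
# Sketch: choose DR when director and real server share a subnet,
# otherwise fall back to TUN. "Same subnet" approximates "same physical
# segment" here, which is an assumption.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split a.b.c.d into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# same_subnet <ip1> <ip2> <prefix_len>: succeeds if both IPs share the network.
same_subnet() {
    mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# pick_lb_kind <director_ip> <real_server_ip> <prefix_len>: prints DR or TUN.
pick_lb_kind() {
    if same_subnet "$1" "$2" "$3"; then echo DR; else echo TUN; fi
}

pick_lb_kind 10.65.32.28 10.65.32.13 23   # same /23 as the director
pick_lb_kind 10.65.32.28 10.65.34.13 23   # hypothetical server in another subnet
```

The first call prints `DR` and the second prints `TUN`.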

LVS-NAT setup

DS (Director Server)

    # Install keepalived
    # Ubuntu
    apt-get install keepalived ipvsadm
    # CentOS
    yum install keepalived ipvsadm

    # update iptables
    vim /etc/sysconfig/iptables
    # For keepalived:
    # allow vrrp
    -A INPUT -p vrrp -j ACCEPT
    -A INPUT -p igmp -j ACCEPT
    # allow multicast
    -A INPUT -d 224.0.0.18 -j ACCEPT

    # reload iptables
    service iptables reload

    # open ip_forward
    echo "1" > /proc/sys/net/ipv4/ip_forward

    # edit sysctl.conf
    vi /etc/sysctl.conf
    net.ipv4.ip_forward = 1

    sysctl -p

    # keepalived for lvs-nat
    vim /etc/keepalived/keepalived.conf

    vrrp_sync_group NC-MAIN-API {
        group {
            NC-MAIN-API-PUB
        }
    }

    vrrp_instance NC-MAIN-API-PUB {
        state BACKUP
        interface bond1
        virtual_router_id 222
        priority 100
        advert_int 1
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            xx.xx.xx.xx/25 dev bond1
        }
    }

    virtual_server xx.xx.xx.xx 15000 {
        delay_loop 6
        lb_algo rr
        lb_kind NAT
        protocol TCP

        real_server 10.71.12.69 15000 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 15000
            }
        }

        real_server 10.71.12.76 15000 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 15000
            }
        }
    }

    # enable and start keepalived
    systemctl start keepalived
    systemctl enable keepalived

    watch ipvsadm -L -n --stats

RS (Real Server)

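On each real server, change the default gateway to the DS (Director Server) VIP, so that reply packets return through the director, which rewrites them before they reach the client.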
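
A quick way to confirm this on a real server is to compare its default route with the expected gateway. The sketch below parses `ip route` style output. The helper names are made up for illustration, and the gateway 192.0.2.1 and the 10.71.12.0/24 route are placeholder data, not values from this setup.

```bash
#!/bin/bash
# Sketch: check that a real server's default route points at the director.
# 192.0.2.1 is a placeholder; substitute the director address that the
# real servers should route through.

# Read `ip route` style lines on stdin and print the default gateway.
default_gw() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# gw_matches <expected_gateway>: reads routes on stdin, succeeds on match.
gw_matches() {
    [ "$(default_gw)" = "$1" ]
}

# Canned input for illustration; on a real server, pipe in `ip -4 route show`.
sample='default via 192.0.2.1 dev eth0
10.71.12.0/24 dev eth0 proto kernel scope link src 10.71.12.69'
if printf '%s\n' "$sample" | gw_matches 192.0.2.1; then
    echo "default gateway OK"
else
    echo "default gateway does not point at the director"
fi
```

If the gateway is wrong, replies bypass the director and clients receive packets from an address they never contacted, so connections hang.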

LVS-DR setup

DS (Director Server)

    # Install keepalived
    # Ubuntu
    apt-get install keepalived ipvsadm
    # CentOS
    yum install keepalived ipvsadm

    # update iptables
    vim /etc/sysconfig/iptables
    # For keepalived:
    # allow vrrp
    -A INPUT -p vrrp -j ACCEPT
    -A INPUT -p igmp -j ACCEPT
    # allow multicast
    -A INPUT -d 224.0.0.18 -j ACCEPT

    # reload iptables
    service iptables reload

    # open ip_forward
    echo "1" > /proc/sys/net/ipv4/ip_forward

    # edit sysctl.conf
    vi /etc/sysctl.conf
    net.ipv4.ip_forward = 1

    sysctl -p

    # keepalived for lvs-dr
    vim /etc/keepalived/keepalived.conf

    vrrp_sync_group GOP {
        group {
            VI_PRI_CONNECT
            VI_PRI_AUTH
        }
    }

    vrrp_instance VI_PRI_CONNECT {
        state BACKUP
        interface bond0
        virtual_router_id 128
        priority 100
        advert_int 1
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            10.65.32.28/23 dev bond0
        }
    }

    virtual_server 10.65.32.28 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        protocol TCP

        real_server 10.65.32.13 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }

        real_server 10.65.32.14 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    virtual_server 10.65.32.28 443 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        protocol TCP

        real_server 10.65.32.13 443 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 443
            }
        }

        real_server 10.65.32.14 443 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 443
            }
        }
    }

    vrrp_instance VI_PRI_AUTH {
        state BACKUP
        interface bond0
        virtual_router_id 129
        priority 100
        advert_int 1
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            10.65.32.29/23 dev bond0
        }
    }

    virtual_server 10.65.32.29 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        protocol TCP

        real_server 10.65.32.22 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }

        real_server 10.65.32.23 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    virtual_server 10.65.32.29 443 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        protocol TCP

        real_server 10.65.32.22 443 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 443
            }
        }

        real_server 10.65.32.23 443 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 443
            }
        }
    }

    # enable and start keepalived
    systemctl start keepalived
    systemctl enable keepalived

    watch ipvsadm -L -n --stats
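
The `virtual_server` blocks above repeat the same `real_server` stanza many times, and a port or address is easy to mistype when copying by hand. One way to reduce that risk is to generate the stanzas. The following is a minimal sketch, not part of GOP's tooling: the function names `emit_real_server` and `emit_virtual_server` are invented for this example, and the check parameters are simply copied from the configuration above.

```bash
#!/bin/bash
# Sketch: generate real_server blocks so that the port in the header and
# the health-check port always agree. Function names are illustrative.

# emit_real_server <ip> <port> [weight]
emit_real_server() {
    ip=$1 port=$2 weight=${3:-100}
    cat <<EOF
    real_server $ip $port {
        weight $weight
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
            connect_port $port
        }
    }
EOF
}

# emit_virtual_server <vip> <port> <lb_kind> <real_ip>...
emit_virtual_server() {
    vip=$1 port=$2 kind=$3
    shift 3
    echo "virtual_server $vip $port {"
    echo "    delay_loop 6"
    echo "    lb_algo rr"
    echo "    lb_kind $kind"
    echo "    protocol TCP"
    for rip in "$@"; do
        emit_real_server "$rip" "$port"
    done
    echo "}"
}

emit_virtual_server 10.65.32.28 443 DR 10.65.32.13 10.65.32.14
```

The output can be redirected into a file and pulled into `keepalived.conf`, or pasted in after review.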

RS (Real Server)

- Edit “/etc/sysconfig/network-scripts/ifcfg-lo” to work around a bug in CentOS 7 (only if you are using CentOS 7): add TYPE=Loopback to the file.

- Add a loopback alias for each Virtual IP on each real server. For example, for the first Virtual IP, create the file “/etc/sysconfig/network-scripts/ifcfg-lo:0”.

- Start the adapters if they are not yet started.

    # add TYPE=Loopback
    echo "TYPE=Loopback" >> /etc/sysconfig/network-scripts/ifcfg-lo

    # add ifcfg-lo:0
    cat > /etc/sysconfig/network-scripts/ifcfg-lo:0 << 'EOF'
    DEVICE=lo:0
    IPADDR=10.65.32.28
    NETMASK=255.255.255.255
    ONBOOT=yes
    EOF

    # ifup lo:0
    ifup lo:0

    # add real_start
    cat > /root/real_start.sh << 'EOF'
    #!/bin/bash
    echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
    EOF

    # chmod 755
    chmod 755 /root/real_start.sh

    # add real.service
    cat > /usr/lib/systemd/system/real.service << 'EOF'
    [Unit]
    Description=autostart lvs real
    After=network.target remote-fs.target nss-lookup.target

    [Service]
    # the script sets the sysctls and exits, so use oneshot rather than forking
    Type=oneshot
    RemainAfterExit=yes
    ExecStart=/root/real_start.sh

    [Install]
    WantedBy=multi-user.target
    EOF

    # enable service
    systemctl enable real.service

    # lvs real server example
    vim /root/lvs_real.sh

    #!/bin/bash
    ### BEGIN INIT INFO
    # Provides:
    # Default-Start: 2 3 4 5
    # Default-Stop: 0 1 6
    # Short-Description: Start realserver
    # Description: Start realserver
    ### END INIT INFO

    # change the VIP to proper value
    VIP=10.65.32.28

    case "$1" in
    start)
        echo "Start REAL Server"
        /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        echo "Stop REAL Server"
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    restart)
        $0 stop
        $0 start
        ;;
    *)
        echo "Usage: $0 {start|stop|restart}"
        exit 1
        ;;
    esac

    exit 0
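
After running either script, it is worth confirming that the ARP settings actually took effect, since a real server that answers ARP for the VIP will take traffic away from the director. The sketch below reads the four values back and reports any mismatch. The function name `check_arp_sysctls` and its optional root-directory argument (useful for testing against a copy of the tree) are assumptions made for this example.

```bash
#!/bin/bash
# Sketch: verify the arp_ignore / arp_announce values that the scripts
# above write. The function name and its argument are illustrative.

# check_arp_sysctls [sysctl_root]
# sysctl_root defaults to /proc/sys; returns 0 only if all values match.
check_arp_sysctls() {
    root=${1:-/proc/sys}
    rc=0
    for iface in lo all; do
        for pair in arp_ignore=1 arp_announce=2; do
            key=${pair%%=*}
            want=${pair#*=}
            got=$(cat "$root/net/ipv4/conf/$iface/$key" 2>/dev/null)
            if [ "$got" != "$want" ]; then
                echo "MISMATCH $iface/$key: want $want, got ${got:-unreadable}"
                rc=1
            fi
        done
    done
    return $rc
}

if check_arp_sysctls; then
    echo "ARP settings OK for LVS-DR"
else
    echo "ARP settings need attention"
fi
```

For an LVS-TUN real server the same idea applies with `tunl0` in place of `lo`.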

LVS-TUN setup

DS (Director Server)

    # Install keepalived
    # Ubuntu
    apt-get install keepalived ipvsadm
    # CentOS
    yum install keepalived ipvsadm

    # update iptables
    vim /etc/sysconfig/iptables
    # For keepalived:
    # allow vrrp
    -A INPUT -p vrrp -j ACCEPT
    -A INPUT -p igmp -j ACCEPT
    # allow multicast
    -A INPUT -d 224.0.0.18 -j ACCEPT

    # reload iptables
    service iptables reload

    # open ip_forward
    echo "1" > /proc/sys/net/ipv4/ip_forward

    # edit sysctl.conf
    vi /etc/sysctl.conf
    net.ipv4.ip_forward = 1

    sysctl -p

    # keepalived for lvs-tun
    vim /etc/keepalived/keepalived.conf

    vrrp_sync_group GOP {
        group {
            VI_PRI_AUTH
        }
    }

    vrrp_instance VI_PRI_AUTH {
        state BACKUP
        interface em1
        virtual_router_id 11
        priority 100
        advert_int 1
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            10.10.36.11/23 dev em1
        }
    }

    virtual_server 10.10.36.11 80 {
        delay_loop 6
        lb_algo rr
        lb_kind TUN
        protocol TCP

        real_server 10.10.36.4 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }

        real_server 10.10.36.7 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    # enable and start keepalived
    systemctl start keepalived
    systemctl enable keepalived

    watch ipvsadm -L -n --stats

    # Alternative: a standalone init script (managing LVS through keepalived.conf is recommended)
    #!/bin/sh
    # Startup script handle the initialisation of LVS
    # chkconfig: - 28 72
    # description: Initialise the Linux Virtual Server for TUN
    #
    ### BEGIN INIT INFO
    # Provides: ipvsadm
    # Required-Start: $local_fs $network $named
    # Required-Stop: $local_fs $remote_fs $network
    # Short-Description: Initialise the Linux Virtual Server
    # Description: The Linux Virtual Server is a highly scalable and highly
    #   available server built on a cluster of real servers, with the load
    #   balancer running on Linux.
    ### END INIT INFO
    # description: start LVS of TUN

    LOCK=/var/lock/lvs-tun.lock
    VIP=10.10.36.11
    RIP1=10.10.36.4
    RIP2=10.10.36.7

    . /etc/rc.d/init.d/functions

    start() {
        # number of ipvsadm entries that already mention the VIP
        PID=$(ipvsadm -Ln | grep -c "${VIP}")
        if [ "$PID" -gt 0 ]; then
            echo "The LVS-TUN Server is already running !"
        else
            # Load the tun mod
            /sbin/modprobe tun
            /sbin/modprobe ipip
            # Set the tun Virtual IP Address
            /sbin/ifconfig tunl0 $VIP broadcast $VIP netmask 255.255.255.255 up
            /sbin/route add -host $VIP dev tunl0
            # Clear IPVS Table
            /sbin/ipvsadm -C
            # ICMP redirect settings (note: this overrides the ip_forward=1 set earlier)
            echo "0" >/proc/sys/net/ipv4/ip_forward
            echo "0" >/proc/sys/net/ipv4/conf/all/send_redirects
            echo "0" >/proc/sys/net/ipv4/conf/default/send_redirects
            # Set Lvs
            /sbin/ipvsadm -At $VIP:80 -s rr
            /sbin/ipvsadm -at $VIP:80 -r $RIP1:80 -i -w 1
            /sbin/ipvsadm -at $VIP:80 -r $RIP2:80 -i -w 1
            /bin/touch $LOCK
            # Run Lvs
            echo "starting LVS-TUN-DIR Server is ok !"
        fi
    }

    stop() {
        # stop Lvs server
        /sbin/ipvsadm -C
        /sbin/ifconfig tunl0 down >/dev/null
        # Remove the tun mod
        /sbin/modprobe -r tun
        /sbin/modprobe -r ipip
        rm -rf $LOCK
        echo "stopping LVS-TUN-DIR server is ok !"
    }

    status() {
        if [ -e $LOCK ]; then
            echo "The LVS-TUN Server is already running !"
        else
            echo "The LVS-TUN Server is not running !"
        fi
    }

    case "$1" in
    start)
        start
        ;;
    stop)
        stop
        ;;
    restart)
        stop
        sleep 1
        start
        ;;
    status)
        status
        ;;
    *)
        echo "Usage: $0 {start|stop|restart|status}"
        exit 1
    esac

    exit 0

RS (Real Server)

    # check ipip module
    modprobe ipip

    # add VIP
    # ifconfig tunl0 down
    ifconfig tunl0 10.10.36.11 broadcast 10.10.36.11 netmask 255.255.255.255 up

    # add route
    route add -host 10.10.36.11 tunl0

    # shut down ARP
    echo '1' > /proc/sys/net/ipv4/conf/tunl0/arp_ignore
    echo '2' > /proc/sys/net/ipv4/conf/tunl0/arp_announce
    echo '1' > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo '2' > /proc/sys/net/ipv4/conf/all/arp_announce
    echo '0' > /proc/sys/net/ipv4/conf/tunl0/rp_filter
    echo '0' > /proc/sys/net/ipv4/conf/all/rp_filter

    # iptables allow ipip
    iptables -I INPUT 1 -p 4 -j ACCEPT
    # persist the rule in /etc/sysconfig/iptables
    vim /etc/sysconfig/iptables
    -A INPUT -p ipv4 -j ACCEPT

    # write script
    vim /etc/init.d/lvs-tun

    #!/bin/sh
    #
    # Startup script handle the initialisation of LVS
    # chkconfig: - 28 72
    # description: Initialise the Linux Virtual Server for TUN
    #
    ### BEGIN INIT INFO
    # Provides: ipvsadm
    # Required-Start: $local_fs $network $named
    # Required-Stop: $local_fs $remote_fs $network
    # Short-Description: Initialise the Linux Virtual Server
    # Description: The Linux Virtual Server is a highly scalable and highly
    #   available server built on a cluster of real servers, with the load
    #   balancer running on Linux.
    ### END INIT INFO
    # description: start LVS of TUN-RIP

    LOCK=/var/lock/ipvsadm.lock
    VIP=10.10.36.11

    . /etc/rc.d/init.d/functions

    start() {
        # number of ifconfig lines that mention tunl0 (non-zero means it is up)
        PID=$(ifconfig | grep -c tunl0)
        if [ "$PID" -ne 0 ]; then
            echo "The LVS-TUN-RIP Server is already running !"
        else
            # Load the tun mod
            /sbin/modprobe tun
            /sbin/modprobe ipip
            # Set the tun Virtual IP Address
            /sbin/ifconfig tunl0 $VIP netmask 255.255.255.255 broadcast $VIP up
            /sbin/route add -host $VIP dev tunl0
            echo "1" >/proc/sys/net/ipv4/conf/tunl0/arp_ignore
            echo "2" >/proc/sys/net/ipv4/conf/tunl0/arp_announce
            echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
            echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
            echo "0" > /proc/sys/net/ipv4/conf/tunl0/rp_filter
            echo "0" > /proc/sys/net/ipv4/conf/all/rp_filter
            /bin/touch $LOCK
            echo "starting LVS-TUN-RIP server is ok !"
        fi
    }

    stop() {
        /sbin/ifconfig tunl0 down
        echo "0" >/proc/sys/net/ipv4/conf/tunl0/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/tunl0/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        # Remove the tun mod
        /sbin/modprobe -r tun
        /sbin/modprobe -r ipip
        rm -rf $LOCK
        echo "stopping LVS-TUN-RIP server is ok !"
    }

    status() {
        if [ -e $LOCK ]; then
            echo "The LVS-TUN-RIP Server is already running !"
        else
            echo "The LVS-TUN-RIP Server is not running !"
        fi
    }

    case "$1" in
    start)
        start
        ;;
    stop)
        stop
        ;;
    restart)
        stop
        start
        ;;
    status)
        status
        ;;
    *)
        echo "Usage: $0 {start|stop|restart|status}"
        exit 1
    esac

    exit 0

    # start lvs-tun
    chmod 755 /etc/init.d/lvs-tun
    service lvs-tun start
    chkconfig lvs-tun on

    # Nginx test
    # on the first real server
    echo "rs1" > /usr/share/nginx/html/index.html
    # on the second real server
    echo "rs2" > /usr/share/nginx/html/index.html
    # on a client
    for i in {1..100}; do curl 10.10.36.11; sleep 0.5; done
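
Reading a hundred alternating lines by eye is error-prone, so it can help to tally the responses instead. The sketch below counts how many replies came from each real server. The function name `tally_responses` is made up for this example, and it assumes each real server returns a single distinctive line, as the `rs1`/`rs2` test pages above do.

```bash
#!/bin/bash
# Sketch: count how many responses came from each real server.
# Reads one response body per line on stdin, prints "<body> <count>".
tally_responses() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Canned input for illustration; against a live VIP one could run:
#   for i in {1..100}; do curl -s 10.10.36.11; done | tally_responses
printf 'rs2\nrs1\nrs2\nrs1\n' | tally_responses
```

With round-robin scheduling and equal weights, the counts for the real servers should come out equal or differ by at most one.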

LVS-DR and LVS-TUN

DS (Director Server)

    # The three packet-forwarding methods (from the ipvsadm man page)
    [packet-forwarding-method]
        -g, --gatewaying    Use gatewaying (direct routing). This is the default.
        -i, --ipip          Use ipip encapsulation (tunneling).
        -m, --masquerading  Use masquerading (network access translation, or NAT).

    Note: Regardless of the packet-forwarding mechanism specified, real servers
    for addresses for which there are interfaces on the local node will use the
    local forwarding method, and packets for those servers will be passed to the
    upper layer on the local node. This cannot be specified by ipvsadm; rather,
    it is set by the kernel as real servers are added or modified.
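
Because the forwarding method is chosen per real server, a hybrid group is simply a virtual service whose real servers use different flags. The sketch below maps a Keepalived `lb_kind` value to the matching ipvsadm flag and prints the resulting command without running it. The function names `lb_kind_to_flag` and `print_add_rs` are invented for this example.

```bash
#!/bin/bash
# Sketch: map a Keepalived lb_kind value to the ipvsadm forwarding flag
# listed in the man page excerpt above. Function names are illustrative.
lb_kind_to_flag() {
    case "$1" in
        DR)  echo "-g" ;;
        TUN) echo "-i" ;;
        NAT) echo "-m" ;;
        *)   echo "unknown lb_kind: $1" >&2; return 1 ;;
    esac
}

# Print (without executing) the ipvsadm command for one real server.
# print_add_rs <vip:port> <rip:port> <lb_kind> [weight]
print_add_rs() {
    flag=$(lb_kind_to_flag "$3") || return 1
    echo "ipvsadm -a -t $1 -r $2 $flag -w ${4:-1}"
}

print_add_rs 10.10.36.11:80 10.10.36.4:80 DR
print_add_rs 10.10.36.11:80 10.10.36.7:80 TUN
```

Printing the commands first makes it easy to review a mixed DR/TUN group before applying it on the director.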

    # ipvsadm
    /sbin/ifconfig tunl0 10.10.36.11 broadcast 10.10.36.11 netmask 255.255.255.255 up
    /sbin/route add -host 10.10.36.11 dev tunl0
    /sbin/ipvsadm -At 10.10.36.11:80 -s rr
    /sbin/ipvsadm -at 10.10.36.11:80 -r 10.10.36.4:80 -g -w 1
    /sbin/ipvsadm -at 10.10.36.11:80 -r 10.10.36.7:80 -i -w 1

    # keepalived.conf
    vrrp_sync_group GOP {
        group {
            VI_PRI_AUTH
        }
    }

    vrrp_instance VI_PRI_AUTH {
        state BACKUP
        interface em1
        virtual_router_id 11
        priority 100
        advert_int 1
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            10.10.36.11/23 dev em1
        }
    }

    virtual_server 10.10.36.11 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        protocol TCP

        real_server 10.10.36.4 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    virtual_server 10.10.36.11 80 {
        delay_loop 6
        lb_algo rr
        lb_kind TUN
        protocol TCP

        real_server 10.10.36.7 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    # check result
    [root@d126027 wangao]# for i in {1..100}; do curl 10.10.36.11; sleep 0.5; done
    rs2
    rs1
    rs2
    rs1
    rs2

    [root@d126009 keepalived]# ipvsadm -Ln --stats
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port               Conns   InPkts  OutPkts  InBytes OutBytes
      -> RemoteAddress:Port
    TCP  10.10.36.11:80                    100      700        0    36700        0
      -> 10.10.36.4:80                      50      350        0    18350        0
      -> 10.10.36.7:80                      50      350        0    18350        0

    [root@d126009 wangao]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.10.36.11:80 rr
      -> 10.10.36.4:80                Route   100    0          0
      -> 10.10.36.7:80                Tunnel  100    0          0
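
The `Forward` column in the listing above is what confirms the hybrid group: one real server is reached by direct routing (`Route`) and the other through the tunnel (`Tunnel`). The sketch below extracts that column from `ipvsadm -Ln` output. The function name `forward_methods` is made up for this example, and the sample text is a copy of the listing above.

```bash
#!/bin/bash
# Sketch: list the forwarding method of each real server from
# `ipvsadm -Ln` output read on stdin. The function name is illustrative.
forward_methods() {
    awk '$1 == "->" && ($3 == "Route" || $3 == "Tunnel" || $3 == "Masq") {
             print $2, $3
         }'
}

# Canned copy of the listing; on a director, use: ipvsadm -Ln | forward_methods
sample='TCP  10.10.36.11:80 rr
  -> 10.10.36.4:80                Route   100    0          0
  -> 10.10.36.7:80                Tunnel  100    0          0'
printf '%s\n' "$sample" | forward_methods
```

For the sample this prints `10.10.36.4:80 Route` and `10.10.36.7:80 Tunnel`, one per line.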

RS (Real Server)

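The real server configuration is the same as in the LVS-DR and LVS-TUN sections above: a real server reached by direct routing uses the LVS-DR RS configuration, and a real server reached through the tunnel uses the LVS-TUN RS configuration.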
